
Elon Musk and the xAI team officially launched Grok3, the latest version of Grok, during a livestream. Ahead of the event, a steady flow of related information, coupled with Musk's round-the-clock promotional hype, raised global expectations for Grok3 to unprecedented levels. Just a week earlier, while commenting on DeepSeek R1 during a livestream, Musk confidently stated, "xAI is about to launch a better AI model." According to the data presented live, Grok3 surpassed all current mainstream models in benchmarks for mathematics, science, and programming. Musk even claimed that Grok3 will be used for computational tasks related to SpaceX's Mars missions, predicting "breakthroughs at the Nobel Prize level within three years." For now, however, these are only Musk's assertions.

After the launch, I tested the latest beta version of Grok3 with the classic trick question for large models: "Which is larger, 9.11 or 9.9?" Regrettably, when asked without any qualifiers or markings, the so-called smartest Grok3 still could not answer correctly; it failed to identify what the question was actually asking.
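To see why this question trips models up when asked without qualifiers, it may help to note that "9.11" and "9.9" order differently depending on whether they are read as decimal numbers or as dotted version numbers. The sketch below is only an illustration of that ambiguity; the function names are hypothetical, and nothing here describes how Grok3 actually processes the question.

```python
from decimal import Decimal


def larger_as_decimal(a: str, b: str) -> str:
    """Return whichever string is larger when both are read as decimal numbers."""
    return a if Decimal(a) > Decimal(b) else b


def larger_as_version(a: str, b: str) -> str:
    """Return whichever string is larger when both are read as dotted versions."""
    def key(s: str) -> tuple:
        # "9.11" -> (9, 11): each dot-separated part is compared as an integer
        return tuple(int(part) for part in s.split("."))
    return a if key(a) > key(b) else b


print(larger_as_decimal("9.11", "9.9"))  # 9.9  (9.11 < 9.90 as numbers)
print(larger_as_version("9.11", "9.9"))  # 9.11 (minor version 11 > 9)
```

Under the numeric reading, which is what the trick question intends, 9.9 is the larger value.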

This test quickly drew considerable attention from many friends. Coincidentally, similar tests overseas have shown Grok3 struggling with basic physics and mathematics questions such as "Which ball falls first from the Leaning Tower of Pisa?" As a result, it has been humorously labeled "a genius unwilling to answer simple questions."

Grok3 is good, but it’s not better than R1 or o1-Pro.

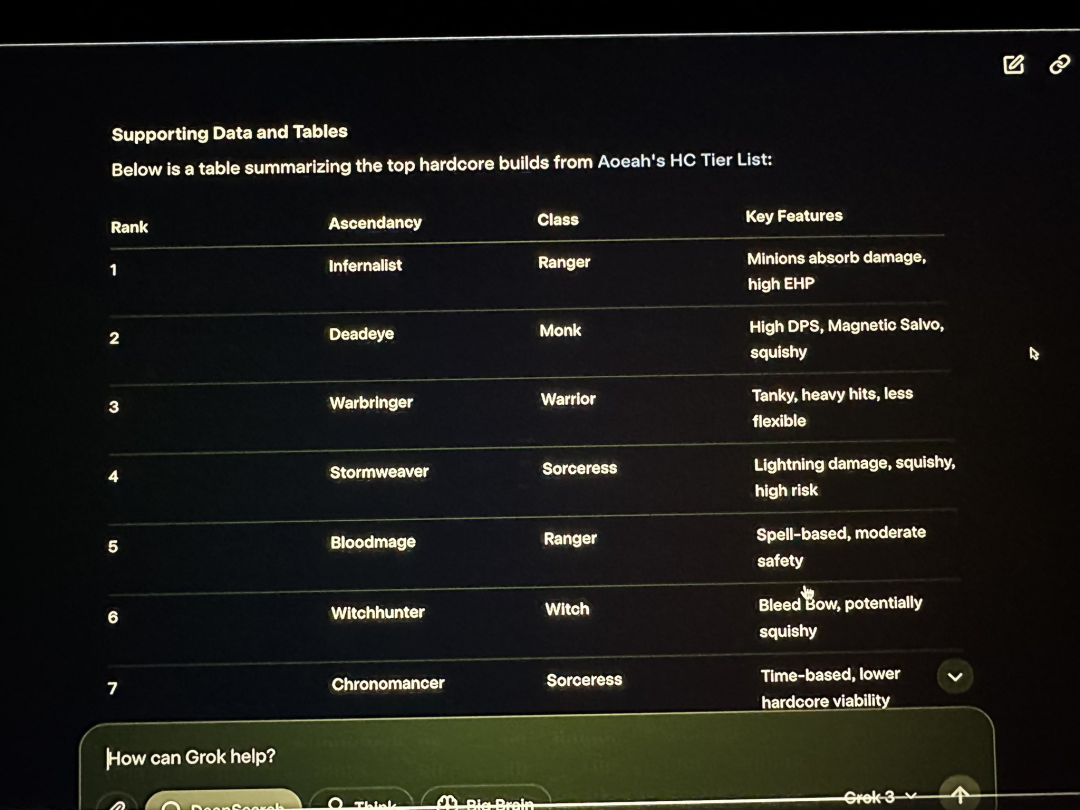

In practice, Grok3 failed many common-knowledge tests. During the xAI launch event, Musk used Grok3 to analyze the character classes and effects in Path of Exile 2, a game he claimed to play often, but most of the answers Grok3 provided were incorrect. Musk did not notice this obvious problem during the livestream.

This mistake not only gave overseas netizens further material to mock Musk for "finding a substitute" to play his games, but also raised significant concerns about Grok3's reliability in practical applications. For such a "genius," whatever its actual capabilities, reliability in extremely complex scenarios such as Mars exploration tasks remains in doubt.

So far, both the testers who received access to Grok3 weeks ago and those who tested the model for just a few hours yesterday point to a common conclusion: "Grok3 is good, but it’s not better than R1 or o1-Pro."

A Critical Perspective on "Disrupting Nvidia"

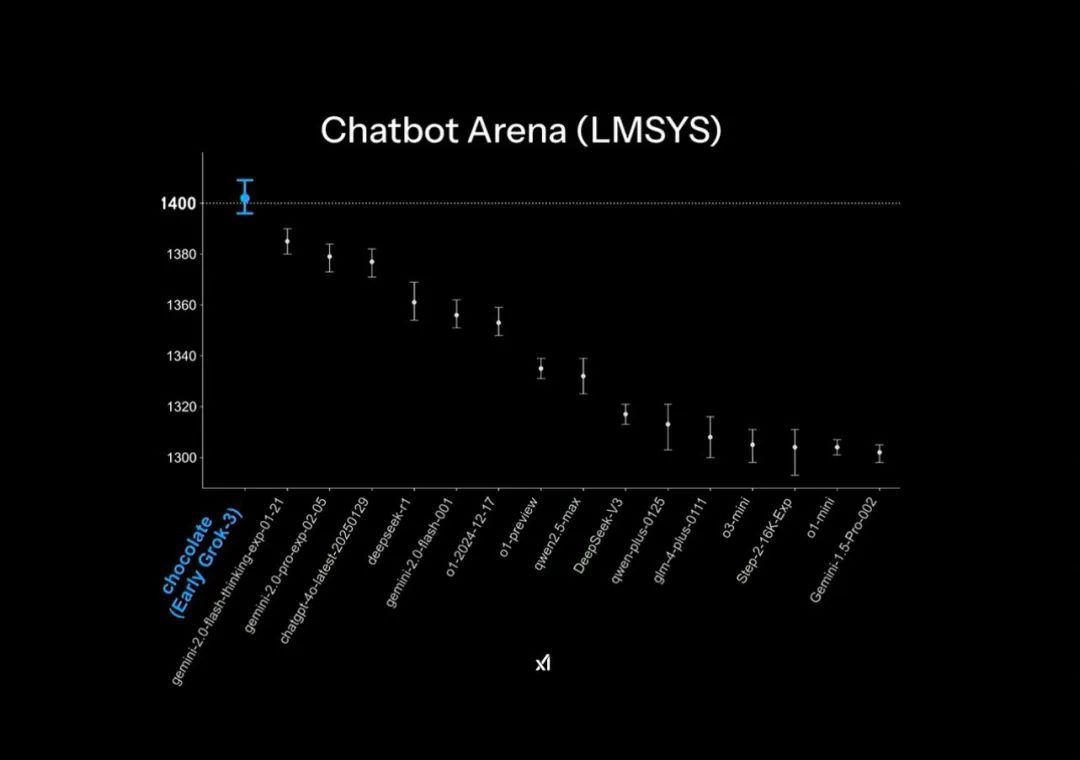

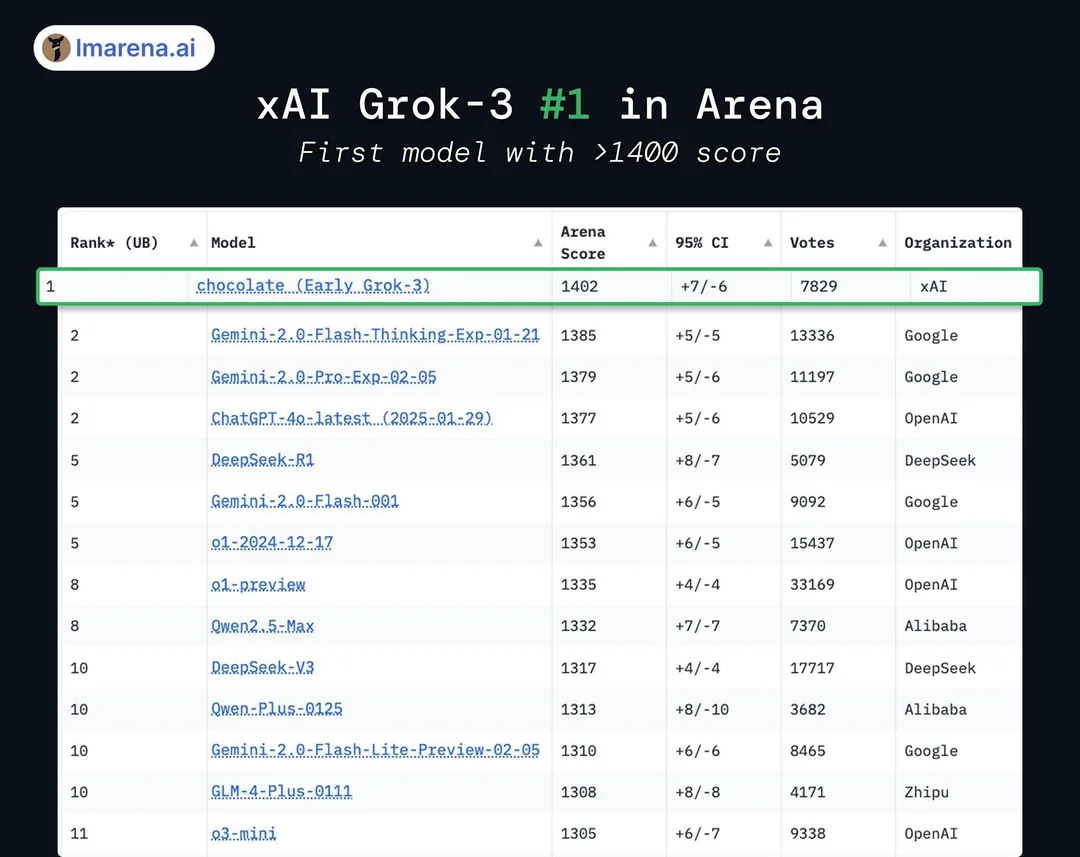

In the slides officially presented at the release, Grok3 was shown to be “far ahead” in the Chatbot Arena, but the chart relied on a graphic trick: the vertical axis of the leaderboard covered only the 1300-1400 score range, which made a difference of about 1% in the results look exceptionally large.

In the actual scoring results, Grok3 is just 1-2% ahead of DeepSeek R1 and GPT-4o, which matches the experience of many users who found "no noticeable difference" in practical tests.
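The effect of the truncated axis can be made concrete with a little arithmetic. The sketch below compares how tall two bars are drawn when the axis starts at zero versus at 1300; the two scores are hypothetical values chosen only to give a lead of roughly 1.4%, not figures taken from the launch slides.

```python
def bar_height_ratio(score_a: float, score_b: float, axis_min: float = 0.0) -> float:
    """Ratio of the drawn bar heights when the vertical axis starts at axis_min."""
    if min(score_a, score_b) <= axis_min:
        raise ValueError("both scores must lie above the axis minimum")
    return (score_a - axis_min) / (score_b - axis_min)


# Hypothetical scores for illustration only (about a 1.4% real difference).
leader, runner_up = 1400.0, 1380.0

full_axis = bar_height_ratio(leader, runner_up)                   # axis from 0
truncated = bar_height_ratio(leader, runner_up, axis_min=1300.0)  # axis from 1300

print(f"full axis:      leader bar is {100 * (full_axis - 1):.1f}% taller")
print(f"truncated axis: leader bar is {100 * (truncated - 1):.1f}% taller")
```

With these assumed numbers, the same lead of about 1.4% is drawn as a bar 25% taller once the axis starts at 1300.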

Although Grok3 has scored higher than all currently publicly tested models, many do not take this seriously: after all, xAI was already criticized for "score manipulation" in the Grok2 era. Once the leaderboard began penalizing answer length and style, Grok2's scores dropped sharply, leading industry insiders to criticize the phenomenon of "high scoring but low ability."

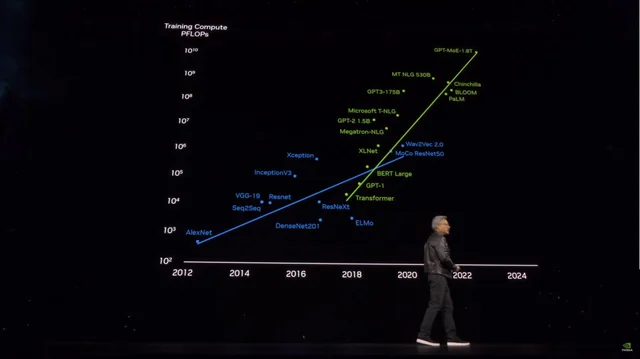

Both the leaderboard "manipulation" and the design tricks in the charts reveal how obsessed xAI and Musk are with the notion of "leading the pack" in model capabilities. Musk paid a steep price for these margins: during the launch, he boasted of using 200,000 H100 GPUs (claiming "over 100,000" during the livestream) and a total training time of 200 million GPU-hours. This led some to see Grok3 as another significant boon for the GPU industry and to dismiss talk of DeepSeek's impact on the sector as "foolish." Some even believe that sheer computational power will be the future of model training.

However, some netizens compared this with the 2000 H800 GPUs that ran for two months to produce DeepSeek V3, calculating that Grok3's actual training compute consumption is 263 times that of V3. Yet the gap between DeepSeek V3 and Grok3, which scored 1402 points, is just under 100 points. Once this data was released, many quickly realized that behind Grok3's title as the "world's strongest" lies a clear case of diminishing marginal utility: the logic that larger models generate stronger performance has begun to show diminishing returns.
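For readers who want to check the order of magnitude themselves, a naive GPU-hour count can be worked out from the figures quoted in this article. The sketch assumes that "two months" means 60 days of continuous use and that an H800 hour and an H100 hour count equally; both are simplifying assumptions. It does not attempt to reproduce the 263x figure cited by netizens, whose inputs are not given here.

```python
HOURS_PER_DAY = 24.0


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours for num_gpus running continuously for the given days."""
    return num_gpus * days * HOURS_PER_DAY


# Figures quoted in the article; 60 days for "two months" is an assumption.
v3_gpu_hours = gpu_hours(num_gpus=2000, days=60)
grok3_gpu_hours = 200_000_000.0  # the "200 million hours" claimed at the launch

naive_ratio = grok3_gpu_hours / v3_gpu_hours

print(f"DeepSeek V3: {v3_gpu_hours:,.0f} GPU-hours")
print(f"Grok3:       {grok3_gpu_hours:,.0f} GPU-hours")
print(f"naive ratio: {naive_ratio:.1f}x")  # about 69.4x under these assumptions
```

Even on this conservative count, Grok3's training budget is dozens of times larger than V3's for a score gap of under 100 points, which is the diminishing-returns argument in numbers.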

Even with "high scoring but low ability," Grok2 had vast amounts of high-quality first-party data from the X (Twitter) platform to support its use. In training Grok3, however, xAI inevitably hit the same "ceiling" that OpenAI currently faces: the lack of premium training data swiftly exposes the diminishing marginal utility of the model's capabilities.

The developers of Grok3 and Musk himself are likely the first to have recognized these facts, which is why Musk has repeatedly said on social media that the version users are experiencing now is "still just the beta" and that "the full version will be released in the coming months." Musk has taken on the role of Grok3's product manager, inviting users to report the issues they encounter in the comments section. He might be the most followed product manager on Earth.

Yet, within a day, Grok3's performance undoubtedly raised alarms for those hoping to rely on "massive computational muscle" to train stronger large models. Based on publicly available Microsoft information, OpenAI's GPT-4 has 1.8 trillion parameters, over ten times as many as GPT-3, and rumors suggest that GPT-4.5 might be even larger.

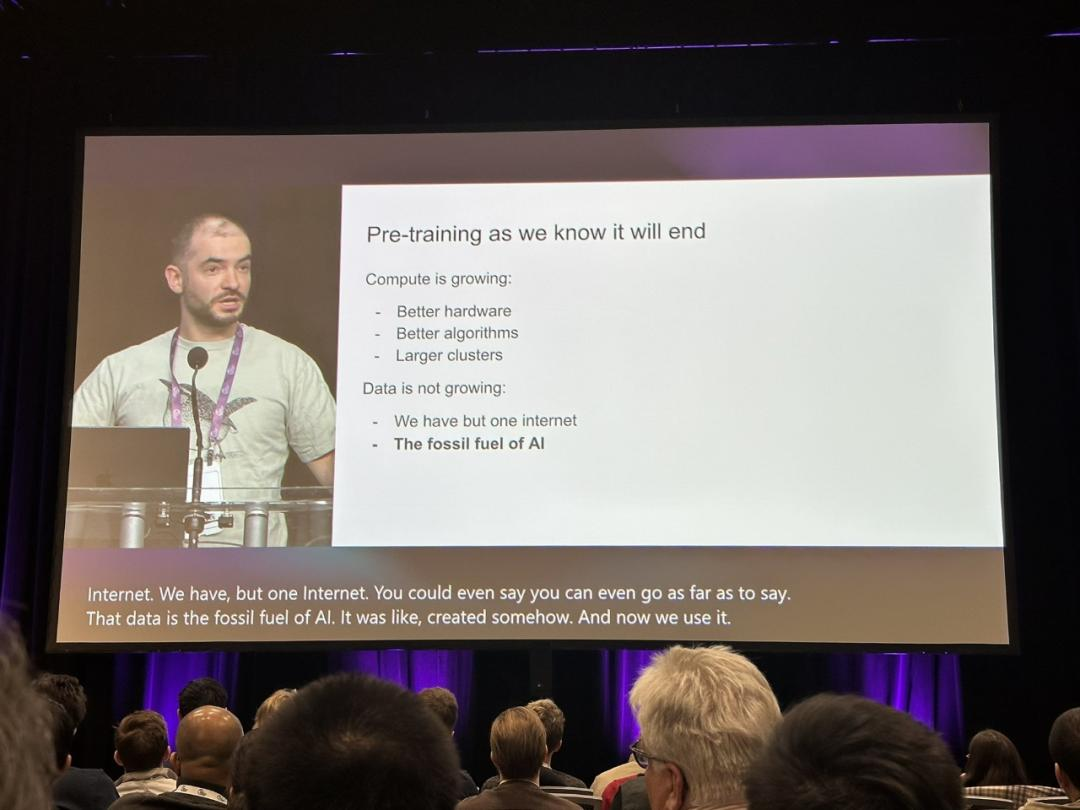

As model parameter sizes soar, training costs are skyrocketing too. With Grok3 as a precedent, contenders such as GPT-4.5 that wish to keep “burning money” to buy better performance through parameter size must reckon with the ceiling now clearly in sight and work out how to break through it. Against this backdrop, a statement made last December by Ilya Sutskever, former chief scientist at OpenAI, has resurfaced in discussions: "The pre-training we are familiar with will come to an end." It is prompting renewed efforts to find the true path for training large models.

Ilya’s viewpoint has sounded the alarm in the industry. He accurately foresaw the imminent exhaustion of accessible new data, leading to a situation where performance cannot continue to be enhanced through data acquisition, likening it to the exhaustion of fossil fuels. He indicated that "like oil, human-generated content on the internet is a limited resource." In Sutskever's predictions, the next generation of models, post-pre-training, will possess "true autonomy" and reasoning capabilities "similar to the human brain."

Unlike today's pre-trained models, which rely primarily on content matching (based on what the model has previously learned), future AI systems will be able to learn and establish methodologies for solving problems in a manner akin to the "thinking" of the human brain. A human can achieve fundamental proficiency in a subject with just basic professional literature, while a large AI model requires millions of data points to reach even entry-level efficacy. Even then, a slight change in wording can be enough for such fundamental questions to be misunderstood, which shows that the model has not genuinely improved in intelligence. The basic yet unsolved questions mentioned at the beginning of this article are a clear example of this phenomenon.

Conclusion

Beyond the brute force, however, if Grok3 does succeed in showing the industry that "pre-trained models are approaching their end," it will carry significant implications for the field.

Perhaps after the frenzy surrounding Grok3 gradually subsides, we will witness more cases like Fei-Fei Li's example of "tuning high-performance models on a specific dataset for just $50," ultimately discovering the true path to AGI.


Post time: Feb-19-2025